When running a SQL server, it’s handy to let it tell you how things are going, rather than having to go look at the logs every so often and see if anything’s broken.

Here are the steps to do this. In this case, I have a large number of clients. To standardize, I setup a single GMail account to use for the outgoing email notifications for all the servers I manage. If you have an internal mail server or are using something other than GMail, you’re a big kid now - plug your settings in.

Tasks:

- Setup your mail account

- Setup Database mail

- Setup Operators

- Setup Alerts

- Maintenance Plan Failure notification

- Testing / Debugging

- Resources

Note: These settings are Instance specific, so you'll need to do this for EACH instance.

Setup your Mail Account

Setup your GMail account. Verify that you can send / receive emails.

Setup Database Mail

Setup Mail Profile

- SQL Mgt Studio

- Management Folder / Database Mail / Right Click / Configure

- Next / Setup up DB Mail / Next (If prompted to enable Database Mail, choose Yes)

- Profile Name: Alert Notifications

- Add new SMTP Account

- Account name: {Descriptive name of your email user}

- Email address: {yourmail@server.com}

- Display Name: SQL Alert – {Server \ Instance name} IE: JumboCorp \ CounterPoint

- Reply email: {yourmail@server.com}

- Email Server: smtp.gmail.com

- Port: 587

- Require secure connections: checked

- Enter the basic authentication username / password. Note that for GMail you’ll use your full email address

- Click Next

- Check Public Profile / Set Default Profile to Yes

- Click Next

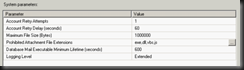

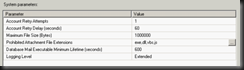

- Accept defaults for system parameters / Next

- Review / Finish / Close

To test, right click Database Mail, choose Send Test E-Mail.

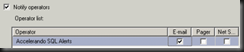

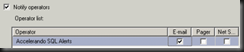

Setup Operators

- Right Click Operators under SQL Server Agent / New Operator

- Name: {Descriptive name for destination email address}

- E-mail name: {destinationemail@server.com}

- Save

Setup additional operators if other users wish to be notified of SQL Alerts

Setup Alerts

Enable Alerts in the SQL Agent

- Right click SQL Server Agent / Properties / Alert System

- Enable mail profile

- Mail system: Database Mail

- Mail Profile: Accelerando Notification

- Ok

- Restart the SQL Server Agent service

- (If notifications don't go out after a SQL Agent restart, you may also need to restart the SQL Service)

Setup Alerts - General Alerts

Run the this script against the Master database.

Enabling Alert Notification

After the alerts are created, you'll need to activate notification for each of them (one at a time)

- Right click the alert / Properties

- Response Tab / Notify operators: checked

- Check email for Accelerando SQL Alerts

- Click Options Tab / Include alert error in: Email (checked)

- Delay between responses: 180 (3 hours)

- Ok

Large Log File Alert

Additional alerts - these cannot be scripted because they depend on specific databases. You'll need to create an alert for each of the databases you wish to monitor.

- Right click Alerts under SQL Server Agent / New Alert

- Alert name: {Servername / Instance Name - DBNAME} DB - Large Log File Alert

- Type: SQL Server performance condition alert

- Object: SQL Server Databases

- Counter: Log File(s) Size (KB)

- Instance: Choose the DB to monitor

- Alert if counter: rises above

- Value: {Insert reasonable number here. For large databases 50000000 would be 50GB)

- Enable the email alert notifications as in the section above

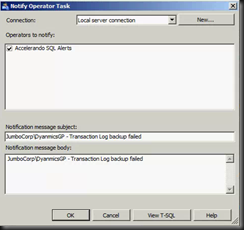

Maintenance Plan Success / Failure notification

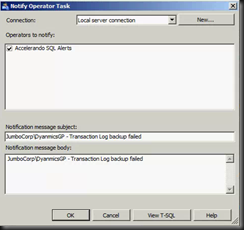

Open the maintenance plan to which you'd like to add notifications. You can add notifications to just the backup task or to multiple tasks. To add a notification, use the following steps:

- Grab the Notify Operator Task and drag it into the maintenance plan.

- From the step on which you'd like to receive the notification, grab an available component output and connect it to the Notify Operator Task. Right click on the component output and choose whether you'd like the notification to happen on success or failure.

- Edit the Notify Operator Task and check any operators that should be alerted in this notification

- In the Subject line, enter {Server\Instance} - {Action} Failed / Succeeded

- For example: JumboCorp\DynamicsGP - Transaction Log backup failed

- In the notification message, repeat the above line.

- Repeat the steps above for any other points in the plan that you'd like notification for.

- NOTE: If you want to receive both a success and failure notification, you'll need to add two separate Notify Operator Tasks

Testing / Debugging

Test Alert Level Notifications

Run the following script against the Master database. It should trigger an alert notification and email response.

RAISERROR('This is a test', 17, 1) WITH LOG

Checking Sent Mail status

Run the following script against the Master database. It will return a list of the emails that have been sent and show the status of each of the emails

SELECT * FROM msdb.dbo.sysmail_allitems

SELECT * FROM msdb.dbo.sysmail_event_log

If sent emails fail, test by installing an email client on that machine with the same configuration as above.

Incoming mail server: pop.gmail.com

Port: 995

Require SSL: Checked

You can download Windows Live Mail here -

http://explore.live.com/windows-live-essentials-other-programs?T1=t2

Only install the email client (live mail)

Resources

SQL Server 2008 : Database Mail - Using SQL Server Agent Mail

http://mscerts.programming4.us/sql_server/sql%20server%202008%20%20%20database%20mail%20-%20using%20sql%20server%20agent%20mail.aspx

Setup SQL Server 2008 Maintenance Plan Email Notifications

http://808techblog.com/2009/07/setup-sql-server-2008-maintena.html

SQL Server Alerts: Soup to Nut

http://www.simple-talk.com/sql/database-administration/sql-server-alerts-soup-to-nuts/

SQL Script - General Alerts

Requirements

DBMail activated

Run against Master DB

Script

USE [msdb]

GO

/****** Object: Alert [Alert Level 17 - Insufficient Resources] Script Date: 11/16/2011 12:20:18 ******/

EXEC msdb.dbo.sp_add_alert @name=N'Alert Level 17 - Insufficient Resources',

@message_id=0,

@severity=17,

@enabled=1,

@delay_between_responses=10800,

@include_event_description_in=1,

@category_name=N'[Uncategorized]',

@job_id=N'00000000-0000-0000-0000-000000000000'

GO

/****** Object: Alert [Alert Level 18 - Nonfatal Internal Error] Script Date: 11/16/2011 12:20:18 ******/

EXEC msdb.dbo.sp_add_alert @name=N'Alert Level 18 - Nonfatal Internal Error',

@message_id=0,

@severity=18,

@enabled=1,

@delay_between_responses=10800,

@include_event_description_in=1,

@category_name=N'[Uncategorized]',

@job_id=N'00000000-0000-0000-0000-000000000000'

GO

/****** Object: Alert [Alert Level 19 - Fatal Error in Resource] Script Date: 11/16/2011 12:20:18 ******/

EXEC msdb.dbo.sp_add_alert @name=N'Alert Level 19 - Fatal Error in Resource',

@message_id=0,

@severity=19,

@enabled=1,

@delay_between_responses=10800,

@include_event_description_in=1,

@category_name=N'[Uncategorized]',

@job_id=N'00000000-0000-0000-0000-000000000000'

GO

/****** Object: Alert [Alert Level 20 - Fatal Error in Current Process] Script Date: 11/16/2011 12:20:18 ******/

EXEC msdb.dbo.sp_add_alert @name=N'Alert Level 20 - Fatal Error in Current Process',

@message_id=0,

@severity=20,

@enabled=1,

@delay_between_responses=10800,

@include_event_description_in=1,

@category_name=N'[Uncategorized]',

@job_id=N'00000000-0000-0000-0000-000000000000'

GO

/****** Object: Alert [Alert Level 21 - Fatal Error in Database Processes] Script Date: 11/16/2011 12:20:18 ******/

EXEC msdb.dbo.sp_add_alert @name=N'Alert Level 21 - Fatal Error in Database Processes',

@message_id=0,

@severity=21,

@enabled=1,

@delay_between_responses=10800,

@include_event_description_in=1,

@category_name=N'[Uncategorized]',

@job_id=N'00000000-0000-0000-0000-000000000000'

GO

/****** Object: Alert [Alert Level 22 - Fatal Error: Table Integrity Suspect] Script Date: 11/16/2011 12:20:19 ******/

EXEC msdb.dbo.sp_add_alert @name=N'Alert Level 22 - Fatal Error: Table Integrity Suspect',

@message_id=0,

@severity=22,

@enabled=1,

@delay_between_responses=10800,

@include_event_description_in=1,

@category_name=N'[Uncategorized]',

@job_id=N'00000000-0000-0000-0000-000000000000'

GO

/****** Object: Alert [Alert Level 23 - Fatal Error: Database Integrity Suspect] Script Date: 11/16/2011 12:20:19 ******/

EXEC msdb.dbo.sp_add_alert @name=N'Alert Level 23 - Fatal Error: Database Integrity Suspect',

@message_id=0,

@severity=23,

@enabled=1,

@delay_between_responses=10800,

@include_event_description_in=1,

@category_name=N'[Uncategorized]',

@job_id=N'00000000-0000-0000-0000-000000000000'

GO

/****** Object: Alert [Alert Level 24 - Fatal Error: Hardware Error] Script Date: 11/16/2011 12:20:19 ******/

EXEC msdb.dbo.sp_add_alert @name=N'Alert Level 24 - Fatal Error: Hardware Error',

@message_id=0,

@severity=24,

@enabled=1,

@delay_between_responses=10800,

@include_event_description_in=1,

@category_name=N'[Uncategorized]',

@job_id=N'00000000-0000-0000-0000-000000000000'

GO

/****** Object: Alert [Alert Level 25 - Fatal Error] Script Date: 11/16/2011 12:20:19 ******/

EXEC msdb.dbo.sp_add_alert @name=N'Alert Level 25 - Fatal Error',

@message_id=0,

@severity=25,

@enabled=1,

@delay_between_responses=10800,

@include_event_description_in=1,

@category_name=N'[Uncategorized]',

@job_id=N'00000000-0000-0000-0000-000000000000'

GO

![closemaps3[1] closemaps3[1]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEiysK9T50GY8vTf32SoZSEaOVWOrUYpszPKBZca9CUw4cjh_zfmzJ7paYZMemmoGYUunr3adOEAJ4LzF3jlSQt7TCfPQrq6N2ZxgkK8_jj-WWfUOMXwBRoddEvqE5fi6TznJsfM9T9VUaw/?imgmax=800)

![DataBaseSmall[1] DataBaseSmall[1]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEiVB9g9fy2FYgb8rUTdIAMFipAVcQ68qKt6IXw-GepeOUFS_NQ6nEcPYnQIbYtmMSghYNLhMEc1hCLH_w6C1oqwCvVsJ6Re3TKKWQbUam6uTezP_1uVqPasgXsqEkgqmuFGbKqHMXjJUiA/?imgmax=800)